CineLOG: A Large-Scale Dataset for Controllable Cinematic Video Synthesis

Abstract

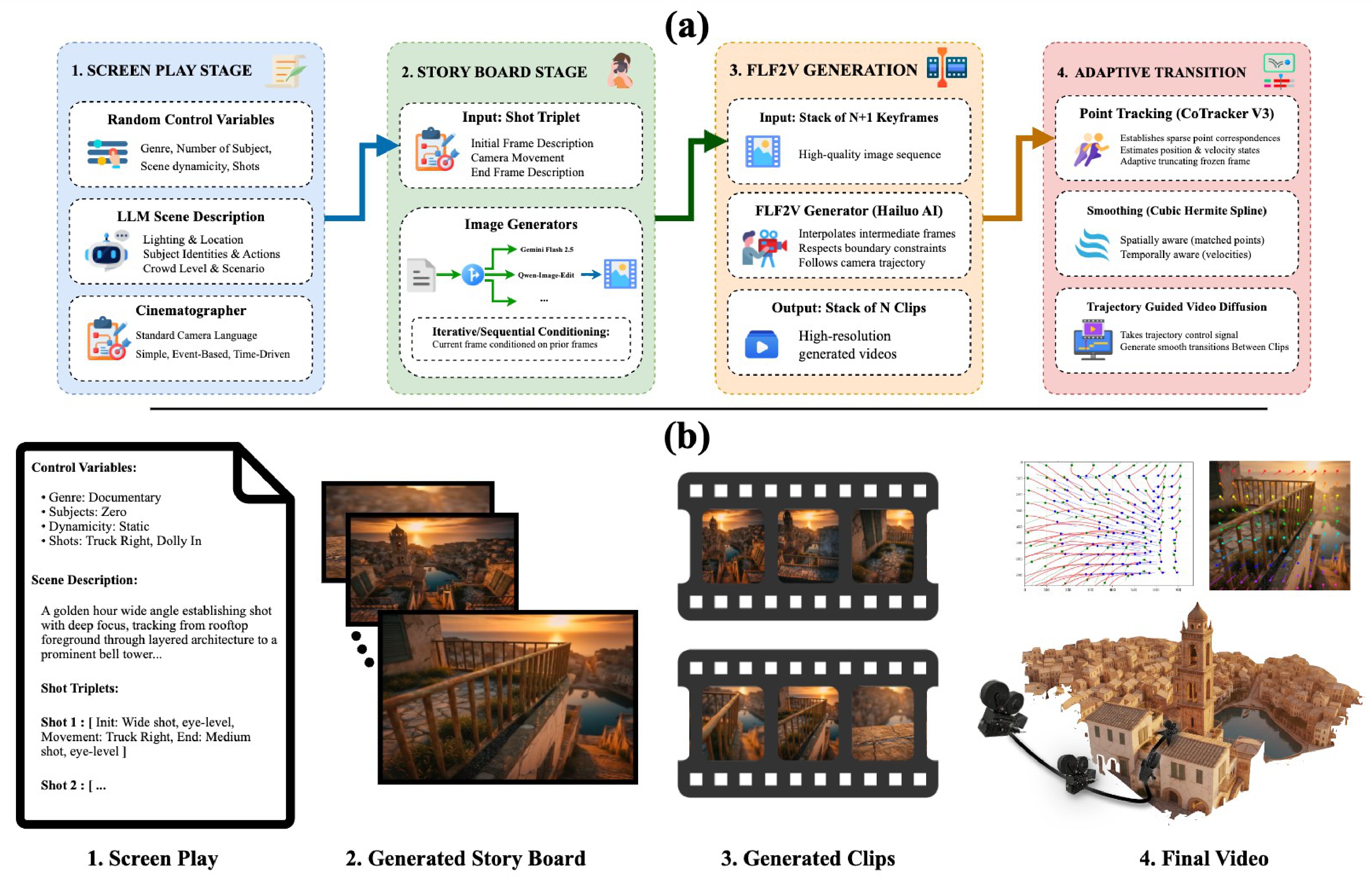

To address this, we introduce CineLOG, a new dataset of 5,000 high-quality, balanced, and uncut video clips. Each entry is annotated with a detailed scene description, explicit camera instructions based on a standard cinematic taxonomy, and a genre label, ensuring balanced coverage across 17 diverse camera movements and 15 film genres. We also present the novel pipeline we designed to create this dataset, which decouples the complex text-to-video (T2V) generation task into four easier stages, each relying on more mature technology. Extensive human evaluations show that our pipeline significantly outperforms SOTA end-to-end T2V models in adhering to specific camera and screenplay instructions.

The CineLOG Dataset

CineLOG addresses the limitations of existing datasets by providing balanced, taxonomy-aligned coverage of all camera primitives. It contains long, uncut, public, and diverse high-fidelity scenes across various genres and subject counts.

Key Features

- 5,000 high-quality video clips

- 17 distinct camera movements (Pan, Tilt, Dolly, etc.)

- 15 film genres (Action, Drama, Sci-Fi, etc.)

- Rich annotations: Scene description, init/end view, subject count.
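To make the annotation fields above concrete, here is a minimal sketch of what a single entry could look like in code. The class name, field names, and example values are hypothetical and chosen for illustration only; the released files may use a different layout.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ClipAnnotation:
    """Hypothetical record layout; not the dataset's official schema."""
    clip_id: str
    scene_description: str
    camera_movement: str  # one of the 17 movements, e.g. "Dolly"
    genre: str            # one of the 15 genres, e.g. "Drama"
    init_view: str        # description of the opening view
    end_view: str         # description of the closing view
    subject_count: int

    def validate(self) -> None:
        """Reject records with empty labels or a negative subject count."""
        if self.subject_count < 0:
            raise ValueError("subject_count must be non-negative")
        for name in ("scene_description", "camera_movement", "genre"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must not be empty")


# Illustrative values only; this is not a real dataset entry.
example = ClipAnnotation(
    clip_id="example-0001",
    scene_description="A detective walks through a rain-soaked alley.",
    camera_movement="Dolly",
    genre="Drama",
    init_view="Wide view of the alley entrance.",
    end_view="Closer view of the detective's face.",
    subject_count=1,
)
example.validate()
record_json = json.dumps(asdict(example), indent=2)
```

Keeping the camera movement and genre as separate fields makes it straightforward to filter or count clips per movement or per genre when checking balance.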

Comparison to SOTA

Unlike real-world datasets (RealEstate10K, CameraBench), which are noisy or imbalanced, and synthetic datasets (LensCraft), which lack realism, CineLOG bridges the gap by offering photorealistic clips with balanced coverage of camera movements and genres.

Methodology: Decoupled Generation Pipeline

To gain camera controllability, we move away from end-to-end T2V and factor the problem into four cooperating modules. This architecture aligns with industry practices of key-framing and storyboard creation.

1. Screenplay & Storyboard

We utilize GPT-5 mini as a storyteller to generate balanced cinematic parameters. We then use a dynamic routing strategy with SOTA T2I models (like Gemini 2.5 Flash and Qwen LoRA) to generate high-fidelity keyframes that respect specific camera instructions (e.g., Dolly In vs. Zoom In).
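The text does not say how balance across camera movements and genres is enforced. One simple way to do it, shown here purely as an assumption, is to fix the (movement, genre) pair for every clip up front and then ask the storyteller model to write a scene for each pair. The function name and the placeholder labels below are hypothetical; the real taxonomy names would replace them.

```python
import random
from collections import Counter
from itertools import product


def balanced_assignments(movements, genres, n_clips, seed=0):
    """Assign a (movement, genre) pair to each clip as evenly as possible.

    Pairs are shuffled once and then cycled through, so every pair is
    used either floor(n_clips / n_pairs) or ceil(n_clips / n_pairs) times.
    """
    combos = list(product(movements, genres))
    rng = random.Random(seed)
    rng.shuffle(combos)
    return [combos[i % len(combos)] for i in range(n_clips)]


# Placeholder labels standing in for the 17 movements and 15 genres.
movements = [f"movement_{i:02d}" for i in range(17)]
genres = [f"genre_{i:02d}" for i in range(15)]

plan = balanced_assignments(movements, genres, n_clips=5000)
combo_counts = Counter(plan)
```

With 17 × 15 = 255 pairs and 5,000 clips, each pair appears 19 or 20 times under this scheme. Because 5,000 is not a multiple of 255, per-movement and per-genre totals can still differ slightly from one another.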

2. Trajectory Guided Transition

To enable coherent, multi-shot sequences, we introduce a novel Trajectory Guided Transition Module. Standard interpolation often fails at the "cut" between clips, causing jarring jumps or frozen frames. Our method constructs a smooth, C1-continuous spatio-temporal path using cubic Hermite splines to guide the generation.
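As a rough illustration of why cubic Hermite splines give a C1-continuous path, the sketch below interpolates a few 2D waypoints. It is not the module's actual implementation: the waypoints, the uniform time spacing (one unit per segment), and the finite-difference tangent rule are all assumptions made for this example.

```python
def hermite_segment(p0, p1, m0, m1, s):
    """Evaluate one cubic Hermite segment at s in [0, 1].

    p0, p1 are the endpoint positions and m0, m1 the endpoint tangents.
    """
    h00 = 2 * s**3 - 3 * s**2 + 1
    h10 = s**3 - 2 * s**2 + s
    h01 = -2 * s**3 + 3 * s**2
    h11 = s**3 - s**2
    return tuple(
        h00 * a + h10 * ma + h01 * b + h11 * mb
        for a, b, ma, mb in zip(p0, p1, m0, m1)
    )


def finite_difference_tangents(points):
    """Central differences inside, one-sided differences at the two ends."""
    n = len(points)
    tangents = []
    for k in range(n):
        lo, hi = max(k - 1, 0), min(k + 1, n - 1)
        tangents.append(
            tuple((b - a) / (hi - lo) for a, b in zip(points[lo], points[hi]))
        )
    return tangents


def build_path(points):
    """Return a function t -> position for t in [0, len(points) - 1]."""
    if len(points) < 2:
        raise ValueError("need at least two waypoints")
    tangents = finite_difference_tangents(points)
    last = len(points) - 1

    def path(t):
        t = max(0.0, min(float(t), float(last)))  # clamp to the valid range
        k = min(int(t), last - 1)                 # index of the active segment
        return hermite_segment(
            points[k], points[k + 1], tangents[k], tangents[k + 1], t - k
        )

    return path


# Hypothetical waypoints, e.g. a tracked subject position in normalized
# image coordinates at four keyframes.
waypoints = [(0.2, 0.5), (0.4, 0.45), (0.7, 0.6), (0.9, 0.5)]
path = build_path(waypoints)
midpoint = path(1.5)  # position halfway between the second and third waypoint
```

Adjacent segments share both the position and the tangent at each waypoint, so the velocity does not jump at the joins. That shared tangent is what C1 continuity means here, and it is the property that avoids a visible jump at a transition.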

Experiments & Results

We compared our pipeline against SOTA end-to-end T2V models, including Hailuo 2.3 and Veo 3.2. While these models produce visually realistic clips, they often fail to follow specific cinematic instructions (see, e.g., Figure 4).

Human Evaluation Results

| Metric | CineLOG (Ours) | Hailuo 2.3 | Veo 3.2 |
|---|---|---|---|
| Screenplay Adherence | 7.6 | 6.2 | 5.6 |
| Camera Adherence | 8.3 | 5.5 | 3.8 |
| Shot Count Correctness (%) | 91.2 | 36.2 | 41.3 |

Qualitative Comparisons: Camera Control

Explore different scenes to see how CineLOG adheres to camera movements and narrative details compared to baseline models.

Video panels: CineLOG (Ours), Hailuo 2.3, Veo 3.2

Qualitative Comparisons: Interpolation Gallery

Compare the smoothness of transitions between keyframes. We compare Simple Concatenation, a Blind Interpolator (no tracking), a Fixed Window approach, and our Adaptive Window method.

Video panels: Simple Concatenation, Blind Interpolator, Fixed Window, Trajectory Aware (Ours)

Citation

If you find our work or dataset useful, please consider citing:

@article{CineLOG2025,
  title={CineLOG: A Large-Scale Dataset and Pipeline for Controllable Cinematic Video Synthesis},
  author={Anonymous},
  journal={-},
  year={2025}
}